The digital landscape is undergoing a profound and accelerating shift, driven by the emergence of sophisticated Large Language Models (LLMs) and advanced search algorithms. Today, achieving visibility means optimizing not only for traditional search engine crawlers but also for the direct retrieval capabilities of AI systems like Google Gemini, Perplexity, and others. A robust on-page SEO strategy is no longer a simple exercise in keyword placement; it is a complex, holistic discipline that ensures technical accessibility, content excellence, and user experience.

This definitive 49-point checklist serves as a comprehensive roadmap, guiding site owners, marketers, and SEO professionals through every critical element required to secure top performance in both conventional search results and AI-driven retrieval contexts. By meticulously addressing these criteria, a web page can establish the strong technical foundation and high-quality relevance necessary to succeed in this new era of information discovery.

⚙️ Technical and Indexing Foundations (The Critical First Steps)

A page’s foundation dictates its eligibility for ranking or retrieval. Before any content analysis can occur, the technical accessibility must be verified. This section ensures the site is properly communicating with all major crawlers.

1. Crawler Access: The robots.txt Verification

The very first check involves the robots.txt file, which is the gatekeeper of your site. It instructs search engine and AI crawlers on which pages they are allowed (or disallowed) to access.

- Check: Is the specific URL allowed for standard search engine bots (Googlebot and Bingbot)?

- Verification: Use a developer tool or a browser extension to inspect the page’s compliance and confirm that no blanket `Disallow` directive blocks the URL path.

- Check: Is the URL allowed for AI crawlers (GPTBot, Google-Extended, PerplexityBot, etc.)?

- Rationale: Blocking these bots directly prevents your content from being used as a source for LLM answers, significantly impacting your visibility in AI summaries and direct retrieval queries. The modern SEO mandate is to be included in the knowledge base, not excluded.

- Optimal Setup: The `robots.txt` should contain minimal or no `Disallow` rules that affect the page’s core content, ensuring a clean “Pass” for all essential crawlers.
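The crawler checks above can be scripted. The following is a minimal sketch using Python's standard `urllib.robotparser`; the sample robots.txt body and the example.com URL are hypothetical, and the crawler list simply mirrors the bots named in this section. Python's parser applies rules in file order, which may differ from how an individual crawler resolves conflicting rules, so treat the result as a first pass rather than a final verdict.

```python
from urllib.robotparser import RobotFileParser

# User-agent tokens named in this checklist; extend as needed.
CRAWLERS = ["Googlebot", "Bingbot", "GPTBot", "Google-Extended", "PerplexityBot"]

def crawler_access(robots_txt: str, url: str) -> dict:
    """Return {user_agent: allowed} for a URL, given a robots.txt body."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in CRAWLERS}

# Hypothetical robots.txt that blocks only GPTBot.
SAMPLE = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""

report = crawler_access(SAMPLE, "https://example.com/chicago-truck-accident-lawyer/")
for agent, allowed in report.items():
    print(f"{agent}: {'Pass' if allowed else 'BLOCKED'}")
```

To audit a live site, the same parser can load the file itself via `set_url()` and `read()` instead of `parse()`.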

2. Indexability Directives

Even if the robots.txt allows access, the page itself might contain meta-directives blocking its inclusion in the index.

- Check: Does the page use a `noindex` directive?

- Verification: Inspect the `robots` tag section using a detailed browser extension. If the directive is `index, follow`, or if the directive is completely blank (which defaults to `index, follow`), the page is indexable. Any presence of `noindex` is an immediate fail for a target ranking page.
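For a quick check without a browser extension, the robots meta tag can be read straight from the HTML. This is a rough sketch built on the standard `html.parser` module; the class and function names are illustrative. It also treats `none` as blocking, since that value is shorthand for `noindex, nofollow`.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collects the directives of every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            self.directives += [d.strip().lower() for d in content.split(",") if d.strip()]

def is_indexable(html: str) -> bool:
    """True when no robots meta tag asks for the page to be left out of the index."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # A missing or empty tag defaults to "index, follow".
    return not ({"noindex", "none"} & set(parser.directives))

print(is_indexable('<meta name="robots" content="index, follow">'))
print(is_indexable('<meta name="robots" content="noindex, follow">'))
```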

3. HTTP Status Code Verification

The page must be accessible and correctly served by the server.

- Check: Does the page return a 200 HTTP status code?

- Rationale: A 200 status indicates the page is working and accessible. Any other code (e.g., 404 Not Found, 301 Redirect, 5xx Server Error) means this exact URL will not be indexed or retrieved as-is: a redirect hands the crawler to a different URL, and an error code returns no usable content. Use an external tool (like an HTTP status code checker) to confirm the 200 status across various geographic and network checks.
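A status check can also be run locally. The sketch below uses the standard `http.client` module so that redirects are reported rather than silently followed; the function names and the `User-Agent` string are hypothetical, and a single request from one machine does not replace the multi-location checks mentioned above.

```python
import http.client
from urllib.parse import urlsplit

def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the status code of one GET request, without following redirects."""
    parts = urlsplit(url)
    is_https = parts.scheme == "https"
    conn_cls = http.client.HTTPSConnection if is_https else http.client.HTTPConnection
    conn = conn_cls(parts.netloc, timeout=timeout)
    try:
        path = parts.path or "/"
        if parts.query:
            path += "?" + parts.query
        conn.request("GET", path, headers={"User-Agent": "status-check-sketch"})
        return conn.getresponse().status
    finally:
        conn.close()

def classify_status(code: int) -> str:
    """Map a status code to a checklist verdict."""
    if code == 200:
        return "pass"
    if 300 <= code < 400:
        return "redirect: audit the destination URL instead"
    if 400 <= code < 500:
        return "client error: page missing or forbidden"
    if 500 <= code < 600:
        return "server error"
    return "unexpected"

# Example (requires network access):
# print(classify_status(fetch_status("https://example.com/")))
```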

4. LLM Direct Retrieval Capability

AI systems have specialized retrieval protocols. You must confirm your content is accessible through these channels.

- Check: Can the page be fetched directly by major LLMs (e.g., ChatGPT’s browsing model)?

- Verification: Test the raw URL within the LLM interface, asking it to summarize or provide key details about the page. The LLM must be able to successfully browse and extract information, confirming that the page is not blocked by non-traditional security measures or unusual framing. This ensures the content is available for direct answer generation, a crucial component of AI Search Engine Optimization (AI SEO).

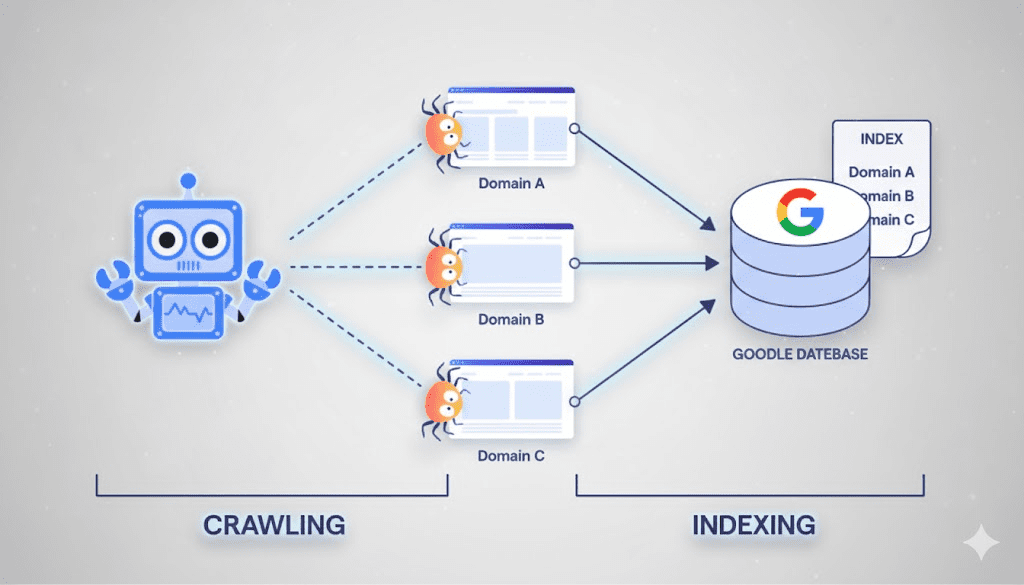

5. Indexing Across Key Search Engines

AI models often pull from multiple search indices for authoritative information.

- Check: Is the page successfully indexed in Google?

- Rationale: This is essential for standard ranking and directly influences Google’s AI model (Gemini).

- Check: Is the page successfully indexed in Bing?

- Rationale: Bing is a primary source for ChatGPT and Perplexity retrieval.

- Check: Is the page successfully indexed in Brave?

- Rationale: There is evidence that search models like Claude may use Brave Search or similar independent indices for their source retrieval.

- Verification: Perform a simple `site:URL` search or a raw URL search across all three engines.
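To avoid retyping the query in three places, a small helper can generate the `site:` search links for manual review. This is only a convenience sketch: the helper name is made up, and it builds ordinary search-page URLs to open in a browser rather than calling any official API.

```python
from urllib.parse import quote_plus

# Public search result pages for the three engines covered in this step.
SEARCH_ENGINES = {
    "Google": "https://www.google.com/search?q=",
    "Bing": "https://www.bing.com/search?q=",
    "Brave": "https://search.brave.com/search?q=",
}

def index_check_links(page_url: str) -> dict:
    """Build one `site:` search link per engine for the given page URL."""
    query = quote_plus(f"site:{page_url}")
    return {name: base + query for name, base in SEARCH_ENGINES.items()}

links = index_check_links("https://example.com/page/")
for engine, link in links.items():
    print(engine, link)
```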

💾 Structure and Code Compliance

The format and structure of the underlying code dramatically affect how crawlers and LLMs process and understand the content.

6. HTML Structure and Content Visibility

LLMs and search engines prefer clean, readable HTML. Pages built heavily on complex, client-side rendering (like JavaScript) without proper server-side rendering can impede retrieval.

- Check: Is the page primarily built using HTML?

- Rationale: Clean HTML is easily parsed, making the text instantly available for retrieval. JavaScript-heavy pages may not be fully rendered by retrieval bots, leading to a failure to gather context.

- Check: Is the core content server-rendered and visible in the page source?

- Verification: View the page source. Key elements like the H1 tag and main body paragraph tags (`<p>`) should be visible immediately without requiring JavaScript execution. This confirms the page is readily understood by the first pass of any retrieval system.
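The "view source" test can be approximated in code by parsing the raw HTML exactly as delivered, with no JavaScript execution. The sketch below is a simplified illustration (the class and function names are hypothetical, and it ignores edge cases such as unclosed tags): it reports whether an H1 carries text and how many words sit inside `<p>` tags.

```python
from html.parser import HTMLParser

class SourceTextParser(HTMLParser):
    """Collects text found inside <h1> and <p> tags in raw HTML."""

    def __init__(self):
        super().__init__()
        self._current = None
        self.text = {"h1": [], "p": []}

    def handle_starttag(self, tag, attrs):
        if tag in self.text:
            self._current = tag

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current and data.strip():
            self.text[self._current].append(data.strip())

def source_check(html: str) -> dict:
    """Summarize what a non-rendering bot would see in the page source."""
    parser = SourceTextParser()
    parser.feed(html)
    body_words = sum(len(chunk.split()) for chunk in parser.text["p"])
    return {"h1_in_source": bool(parser.text["h1"]), "body_words": body_words}

server_rendered = "<body><h1>Chicago Truck Accident Lawyer</h1><p>We help injured drivers.</p></body>"
client_rendered = '<body><div id="root"></div><script src="app.js"></script></body>'
print(source_check(server_rendered))
print(source_check(client_rendered))
```

A page that reports no H1 and zero body words in its source is likely relying on client-side rendering for its core content.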

7. Canonical URL Setting

A canonical tag prevents duplicate content issues, ensuring link equity is consolidated to the preferred URL.

- Check: Is a canonical URL set?

- Verification: The canonical tag should be **self-referencing**, pointing to the current, official URL of the page. This is standard practice for healthy SEO and helps eliminate confusion for both crawlers and LLMs.
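A self-referencing canonical can be confirmed by comparing the tag's `href` with the page's own URL. The following is a minimal sketch with illustrative names; it assumes an exact string match, so a trailing-slash or protocol difference is deliberately reported as pointing elsewhere.

```python
from html.parser import HTMLParser

class CanonicalParser(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        rels = (attr.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rels and attr.get("href"):
            self.canonicals.append(attr["href"])

def canonical_status(html: str, page_url: str) -> str:
    """Classify the canonical setup of a page."""
    parser = CanonicalParser()
    parser.feed(html)
    if not parser.canonicals:
        return "missing"
    if len(parser.canonicals) > 1:
        return "multiple"
    # Exact comparison on purpose: small URL differences matter here.
    return "self-referencing" if parser.canonicals[0] == page_url else "points elsewhere"

page = "https://example.com/chicago-truck-accident-lawyer/"
print(canonical_status(f'<link rel="canonical" href="{page}">', page))
```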

🎯 Intent and Relevance (The SERP Alignment)

Content must not only be technically sound but must also align perfectly with user expectations as defined by the current Search Engine Results Page (SERP).

8. Matching Dominant Search Intent

The single most critical factor for ranking is matching the user’s intent as demonstrated by the top-ranking pages.

- Check: Does the page’s structure and purpose **match the dominant intent** shown in the live SERP for the target keyword?

- Analysis: Study the top 10 results. Are they primarily **Commercial** (transactional, e-commerce, service pages)? Are they **Informational** (blog posts, guides, tutorials)? Or are they **Navigational** (homepage, brand search)? If the SERP is 99% commercial service pages, your page must also be a commercial service page. Trying to rank an informational blog post against commercial intent is a common, high-risk mistake. The safest strategy is to **copy the intent** of the top-ranking results.

9. Qualification for SERP Features

If the SERP displays special features (e.g., Local Packs, People Also Ask, Knowledge Panels), the page must include the necessary elements to qualify for those features.

- Check: Does the page include elements needed to qualify for existing SERP features?

- Example (Local Pack): If a **Local Pack** is present, the business must have an optimized **Google Business Profile**, a local address, and an active strategy for acquiring reviews. This is a prerequisite for local visibility, often overriding traditional organic ranking factors.

10. Content Freshness and Currency

LLMs and Google strongly favor content that is current and recently updated, particularly for time-sensitive or rapidly changing topics.

- Check: Does the page display a **visible publish date** and a **clearly labeled last updated** date? (Primarily for informational content).

- Check: Is the content actually current, reflecting any recent changes in the industry, laws, or product status?

- Code Audit: For commercial pages lacking a visible date, audit the page source for outdated year mentions (e.g., **2016** or **2020**). The presence of old dates signals to crawlers and LLMs that the content may be stale, which can significantly damage performance. **Regularly refreshing and re-publishing pages** is crucial for maintaining a perception of freshness.
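The year audit lends itself to a simple pattern search. Here is a rough sketch; the `max_age` threshold of two years is an arbitrary assumption, and matches still need a human look because a founding year or a cited statute is not necessarily stale.

```python
import re
from datetime import date
from typing import List, Optional

# Four-digit years from 1900 to 2099 that stand alone as a token.
YEAR = re.compile(r"\b(?:19|20)\d{2}\b")

def stale_years(source: str, max_age: int = 2, today: Optional[date] = None) -> List[int]:
    """Return distinct years in the page source older than `max_age` years."""
    current = (today or date.today()).year
    years = {int(match) for match in YEAR.findall(source)}
    return sorted(year for year in years if year < current - max_age)

sample = "Copyright 2016 Example Firm. Guide updated 2020. Offer valid through 2025."
print(stale_years(sample, today=date(2025, 6, 1)))
```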

📏 Readability and User Experience (UX/CRO)

While SEO is often perceived as a technical pursuit, on-page optimization is inextricably linked to user experience and conversion rate optimization (CRO). Pages that frustrate users fail to convert, and the same problems tend to show up as high bounce rates and poor Core Web Vitals, which indirectly harm SEO performance.

11. Scannability and “Too Long; Didn’t Read” (TL;DR)

LLMs retrieve content in **chunks**. Clear structure and scannability make content easier for both humans and AI to parse and extract key information.

- Check: Is the content highly **scannable**?

- Check: Does the page include a **short verdict box** or **TL;DR summary** at the top? (Highly recommended for dense informational content).

- Rationale: This allows the LLM to instantly grab the core answer, satisfying the query quickly and ensuring the page’s main point is retrieved accurately.

12. Design and Conversion Rate Optimization (CRO)

Poor design choices can confuse the user, diminish trust, and lead to lost conversions—the ultimate goal of a transactional page.

- Check: Are design elements (colors, fonts, headings) consistent and non-competitive with links?

- Best Practice: Avoid using the **universal link color (blue)** for non-linked headings or body text. Let links look like links.

- Check: Is the primary Call to Action (CTA)—e.g., a phone number—**prominent and visually emphasized**?

- Rationale: For transactional pages, maximizing goal completions is the **number one objective**, with SEO being the second. A muted or hidden CTA reduces the likelihood of the desired action.

- Check: Is the **primary headline above the fold** (visible immediately without scrolling)?

- UX 101: The headline tells the user where they are and what the page is about. Placing it too far down confuses the user and leads to immediate abandonment.

13. Word Count Sufficiency and Brevity

Word count is not a direct ranking factor, but context is.

- Check: Is the word count **sufficient to satisfy search intent**?

- Verification: Compare the page’s word count to the **median word count** of the top 10 competitors (excluding extreme outliers).

- Check: Is the content concise and **without unnecessary padding or fluff**?

- Strategy: For commercial/transactional pages, aim for the *low end* of the competitive word count range. Focus on **brevity** and conversions. Excessive word count (e.g., 4,300 words when the median is 1,500) often signals manipulation attempts rather than genuine information gain, and can overwhelm the user. The goal is substance, not quantity.
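The median comparison is easy to compute once the competitor word counts are collected. The sketch below uses made-up counts, and its outlier handling (dropping the single lowest and highest values) is a crude assumption rather than a prescribed method.

```python
from statistics import median

def word_count_report(page_words: int, competitor_words: list) -> dict:
    """Compare a page's word count with the median of its competitors."""
    ranked = sorted(competitor_words)
    # Assumed outlier rule: drop the lowest and highest count when
    # there are at least five competitors to work with.
    trimmed = ranked[1:-1] if len(ranked) >= 5 else ranked
    target = median(trimmed)
    return {
        "median": target,
        "low_end": trimmed[0],
        "ratio": round(page_words / target, 2),
    }

# Hypothetical word counts for the top 10 results.
competitors = [900, 1200, 1400, 1500, 1500, 1600, 1700, 1800, 2100, 6000]
print(word_count_report(4300, competitors))
```

A ratio well above 1 on a commercial page suggests trimming toward the low end of the range.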

14. Basic Grammar and Spelling

Errors erode trust and may signal a lack of quality control to both users and sophisticated crawlers.

- Check: Is the page free of **basic grammatical and spelling errors**?

- Tip: Use AI tools integrated into content optimizers to quickly perform a full spelling and grammar analysis, eliminating the need for separate external services.

15. Heading Hierarchy and Logic

The heading structure provides a roadmap of the content for crawlers and LLMs.

- Check: Is the **heading hierarchy logical** (`H1` followed by nested `H2` and then `H3`)?

- Verification: Use a browser extension or content brief tool to inspect the heading structure. A structure that is **“H2-heavy”** (using too many `H2` tags sequentially) can confuse the content’s thematic flow.

- Goal: The structure should be logical, with short paragraphs and, where appropriate, **list** or **table** formats for easy data extraction.
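The nesting rule can be checked mechanically by reading heading levels in document order. This is a minimal sketch with a hypothetical function name; it flags a first heading that is not an H1 and any jump that skips a level, and it relies on a regular expression rather than a full HTML parser.

```python
import re

# Matches the opening of <h1> through <h6>, in any letter case.
HEADING = re.compile(r"<h([1-6])\b", re.IGNORECASE)

def heading_issues(html: str) -> list:
    """Return a list of hierarchy problems found in the heading order."""
    levels = [int(n) for n in HEADING.findall(html)]
    issues = []
    if levels and levels[0] != 1:
        issues.append("first heading is not an H1")
    for prev, cur in zip(levels, levels[1:]):
        if cur > prev + 1:
            issues.append(f"H{prev} is followed by H{cur}, skipping a level")
    return issues

print(heading_issues("<h1>A</h1><h2>B</h2><h3>C</h3><h2>D</h2>"))
print(heading_issues("<h2>A</h2><h1>B</h1><h3>C</h3>"))
```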

16. Page Loading Speed (Core Web Vitals)

Speed is a confirmed ranking factor, especially on mobile devices.

- Check: What is the page speed score on **Google PageSpeed Insights** for **mobile** and **desktop**?

- Focus: **Mobile speed** is often the most critical, particularly for B2C or local service-based traffic. A low mobile score (e.g., 57/100) indicates significant optimization is needed.
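Scores can also be pulled programmatically from the PageSpeed Insights API (v5) rather than the web interface. The sketch below builds the request URL and reads the Lighthouse performance score, which the API reports on a 0 to 1 scale. The helper names are illustrative, heavier use generally calls for an API key (omitted here), and a failed audit can return a missing score, which this sketch does not handle.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"

def psi_request_url(page_url: str, strategy: str = "mobile") -> str:
    """Build the PageSpeed Insights request URL for one page and device type."""
    if strategy not in ("mobile", "desktop"):
        raise ValueError("strategy must be 'mobile' or 'desktop'")
    return PSI_ENDPOINT + "?" + urlencode({"url": page_url, "strategy": strategy})

def performance_score(psi_response: dict) -> int:
    """Convert the 0-1 Lighthouse performance score to the familiar 0-100 scale."""
    score = psi_response["lighthouseResult"]["categories"]["performance"]["score"]
    return round(score * 100)

def fetch_score(page_url: str, strategy: str = "mobile") -> int:
    """Call the API (requires network access) and return the 0-100 score."""
    with urlopen(psi_request_url(page_url, strategy), timeout=60) as response:
        return performance_score(json.load(response))

# Trimmed-down, hypothetical response used to show the parsing step.
sample_response = {"lighthouseResult": {"categories": {"performance": {"score": 0.57}}}}
print(performance_score(sample_response))
```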

17. Mobile Friendliness

The page must render perfectly and intuitively on smaller screens.

- Check: Is the page **mobile-friendly**?

- Verification: Use a mobile device to confirm that the layout is clean, text is readable without zooming, and all CTAs are easily clickable.

18. Reading Level Appropriateness

Content must be easily digestible by the target audience.

- Check: Is the **reading level appropriate** for the target audience and general internet consumption?

- Best Practice: Most online content should aim for a **high school to elementary reading level**. Technical content (e.g., legal or medical) often fails this check, using language that is too complex (e.g., “College Graduate” level), leading to confusion and lower conversion rates. Simplifying the language is key to better engagement and comprehension.
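Reading level is commonly estimated with the Flesch-Kincaid grade formula: 0.39 × (words per sentence) + 11.8 × (syllables per word) − 15.59. The sketch below implements it with a naive vowel-group syllable counter, which is an approximation of my own choosing; dedicated readability tools will give somewhat different numbers, so use it for relative comparisons only.

```python
import re

def count_syllables(word: str) -> int:
    """Rough heuristic: count vowel groups, discounting a silent trailing 'e'."""
    word = word.lower()
    groups = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and groups > 1:
        groups -= 1
    return max(groups, 1)

def flesch_kincaid_grade(text: str) -> float:
    """Estimate the US school grade needed to read the text."""
    words = re.findall(r"[A-Za-z']+", text)
    if not words:
        raise ValueError("text contains no words")
    sentences = max(len(re.findall(r"[.!?]+", text)), 1)
    syllables = sum(count_syllables(word) for word in words)
    return 0.39 * len(words) / sentences + 11.8 * syllables / len(words) - 15.59

plain = "We help you after a crash. Call us today."
dense = ("Notwithstanding contributory negligence, compensatory damages remain "
         "recoverable under applicable jurisdictional statutes.")
print(round(flesch_kincaid_grade(plain), 1))
print(round(flesch_kincaid_grade(dense), 1))
```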

🔑 Keyword and Topic Coverage (The Relevance Score)

This is the classic, foundational on-page SEO layer, now enhanced with a focus on comprehensive topic coverage for LLM context.

19. Primary Keyword Placement

Strategic placement of the target keyword is essential for establishing immediate relevance.

- Check: Does the **primary keyword** (including local modifier, if applicable) appear in the **URL**?

- Example: For “Chicago truck accident lawyer,” the URL should include **`/chicago-truck-accident-lawyer/`**.

- Check: Does the primary keyword appear in the **Title Tag**?

- Check: Does the primary keyword appear in the **Meta Description**?

- Check: Does the primary keyword appear in the **H1 Tag**?

- Check: Does the primary keyword appear in the **first 100 words** of the body copy?

- Check: Are **keyword variations** included in the **H2 Tags**?
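The exact-match placement checks above can be bundled into one report. This is a simplified sketch with hypothetical function names and sample values; it looks only for the exact phrase, so the H2 variation check still needs a manual or tool-assisted review.

```python
import re

def slugify(text: str) -> str:
    """Turn a phrase into a lowercase, hyphen-separated URL slug."""
    return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")

def keyword_placement(keyword, url, title, meta_description, h1, body) -> dict:
    """Report where the primary keyword appears as an exact phrase."""
    kw = keyword.lower()
    first_100 = " ".join(body.split()[:100]).lower()
    return {
        "url": slugify(keyword) in url.lower(),
        "title": kw in title.lower(),
        "meta_description": kw in meta_description.lower(),
        "h1": kw in h1.lower(),
        "first_100_words": kw in first_100,
    }

placement = keyword_placement(
    keyword="Chicago truck accident lawyer",
    url="https://example.com/chicago-truck-accident-lawyer/",
    title="Chicago Truck Accident Lawyer | Example Firm",
    meta_description="Free case review.",
    h1="Chicago Truck Accident Lawyer",
    body="If you need a Chicago truck accident lawyer, call today.",
)
print(placement)
```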

20. Descriptive and Engaging Headings

Headings should be short, relevant, and encourage the user to continue reading.

- Check: Are the headings **descriptive** and do they accurately represent the section content?

21. Comprehensive Topic Coverage (The LLM Requirement)

This is the most important factor in modern content optimization. LLMs need a full, 360-degree understanding of the topic to synthesize a complete and authoritative answer.

- Check: Does the content **cover all relevant topics** used by top-ranking competitors?

- Verification: Use a content optimization tool to compare the page’s coverage against competitors and identify **“unused topics”**. A high volume of unused, relevant topics is a clear signal that the content needs a complete overhaul. A page that misses core sub-topics (e.g., “statute of limitations,” “types of truck accidents,” “insurance claims”) will be perceived as incomplete and less authoritative by both Google and LLMs.
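The idea behind an "unused topics" report can be illustrated with a simple set comparison. In this sketch the competitor topic lists are assumed to come from a content tool or manual review, the 50% threshold is arbitrary, and matching is plain substring search, which is far cruder than what commercial optimizers do.

```python
from collections import Counter

def unused_topics(page_text: str, competitor_topics: list, min_share: float = 0.5) -> list:
    """List topics used by at least `min_share` of competitors but absent from the page."""
    counts = Counter()
    for topics in competitor_topics:
        counts.update({topic.lower() for topic in topics})
    needed = len(competitor_topics) * min_share
    text = page_text.lower()
    return sorted(t for t, n in counts.items() if n >= needed and t not in text)

# Hypothetical topic sets extracted from three competing pages.
competitors_topics = [
    {"statute of limitations", "insurance claims"},
    {"statute of limitations", "types of truck accidents"},
    {"insurance claims", "statute of limitations"},
]
page_copy = "Our firm handles insurance claims after a crash."
print(unused_topics(page_copy, competitors_topics))
```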

💰 Conversion and Goal Completion (The Business Outcome)

For any page with a transactional goal, successful on-page SEO must culminate in a smooth and reliable goal completion process.

22. Functional Calls to Action (CTAs)

All mechanisms designed to convert the user must be fully tested.

- Check: Are the **phone number CTAs actually clickable** on mobile devices? (A crucial check for local lead generation).

- Check: Does the lead generation form (e.g., “Free Case Review”) **work correctly**?

- Verification: Use a testing email service (like Mailsac or a similar email testing tool) to submit the form and confirm that the lead is successfully received by the business.
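Whether a phone number is tappable usually comes down to whether it is wrapped in a `tel:` link. The following sketch lists the `tel:` links in a page's HTML; the names and the sample number are made up. It does not replace a tap test on a real phone or an end-to-end form submission.

```python
from html.parser import HTMLParser

class TelLinkParser(HTMLParser):
    """Collects phone numbers from <a href="tel:..."> links."""

    def __init__(self):
        super().__init__()
        self.numbers = []

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href") or ""
        if tag == "a" and href.lower().startswith("tel:"):
            self.numbers.append(href[4:])

def tel_links(html: str) -> list:
    """Return every phone number exposed as a clickable tel: link."""
    parser = TelLinkParser()
    parser.feed(html)
    return parser.numbers

clickable = '<a href="tel:+13125550100">Call now</a><a href="/contact">Contact</a>'
plain_text = "<p>Call (312) 555-0100</p>"
print(tel_links(clickable))
print(tel_links(plain_text))
```

An empty result on a page that displays a phone number suggests the number is plain text and may not be tappable on every device.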

23. Post-Submission Funnel and Trust Building

The conversion process does not end with a form submission; the post-submission experience is vital for securing the deal.

- Check: After form submission, does the user land on a **Thank You Page** instead of simply seeing a generic message?

- Best Practice: The Thank You Page should include a **short video** from the brand/founder, **setting expectations** for the follow-up process and reinforcing the brand’s value.

- Check: Does the prospect receive an **immediate email confirmation** of their submission?

- Rationale: This builds trust and assures the user the submission was successful. This basic step is frequently overlooked but critical for the conversion pipeline.

Conclusion: On-Page as a Force Multiplier

The 49-point On-Page SEO Checklist represents more than just a list of optimizations; it is a blueprint for building a **technically resilient, user-focused, and AI-compatible** web asset.

The analysis of a typical underperforming page reveals that the most significant failures are often not in simple keyword density, but in the critical areas that affect technical accessibility, user trust, and complete topic coverage. Pages that are built upon outdated code, suffer from low mobile speeds, confuse users with poor design, and neglect crucial conversion funnels cannot compete, regardless of the quality of their backlink profile.

In the current digital landscape, On-Page SEO must be viewed as a **force multiplier**. While external factors—like backlink acquisition and overall domain authority—remain dominant drivers of search performance, a weak on-page foundation nullifies their impact. By committing to this comprehensive checklist, practitioners ensure their content is not only seen by traditional search engines but is also readily understood, retrieved, and prioritized by the next generation of AI search models. This strategic approach moves the page from merely existing to **actively performing**, securing its place as an authoritative source in the ever-evolving ecosystem of digital information.