The convergence of artificial intelligence (AI) and cybersecurity is no longer a futuristic concept; it is the defining reality of modern digital defense. In the near future, AI, particularly systems based on machine learning (ML) and deep learning (DL), will transition from a supplementary tool to a core element of every organization’s security infrastructure. For decades, human involvement has been considered the indispensable linchpin of cybersecurity. While expert human oversight remains critical, machines are rapidly proving that they can outperform human specialists in certain high-volume, high-velocity tasks, fundamentally reshaping the defensive landscape.

I. Understanding the Foundational Technologies

The technological advancements driving this shift can be broken down into three core, interconnected disciplines. A detailed understanding of these distinctions is vital for grasping their current and potential role in defense:

- Artificial Intelligence (AI): At its broadest, AI aims to give computer systems the responsive, problem-solving, and cognitive capabilities analogous to the human mind. It is the overarching field that governs the goals of autonomous decision-making and intelligent action. In a cybersecurity context, “true” or strong AI would be capable of autonomously analyzing a novel attack, developing a countermeasure, and deploying it without prior human programming or training on that specific threat pattern. This level of autonomy is still largely aspirational.

- Machine Learning (ML): ML is a sub-field of AI. It builds statistical models from past data and uses them to guide decisions about new data. Instead of being explicitly programmed to perform a task, ML systems are trained on large datasets, from which they learn to recognize patterns, make classifications, and predict outcomes. For instance, in cybersecurity, an ML algorithm might be trained on millions of examples of benign network traffic and thousands of examples of malware traffic. It learns the **features** (like packet size, destination, frequency) that distinguish the two. However, ML still requires human intervention (or reinforcement learning feedback loops) to make necessary corrections and validate its interpretations. Machine learning is arguably the most relevant and widely deployed AI-based cybersecurity discipline today. Commonly used algorithms include Support Vector Machines (SVMs), Decision Trees, and k-Nearest Neighbors (k-NN).

- Deep Learning (DL): Deep Learning is a specialized sub-field of ML. It works similarly to machine learning but uses complex, multi-layered Artificial Neural Networks (ANNs) to process data. These networks, often containing dozens or hundreds of hidden layers, allow the system to automatically discover the features required for classification and prediction without manual feature engineering. This self-learning capability means DL can make adjustments independently and is highly effective for tasks involving unstructured data, such as image recognition (for CAPTCHA breaking and analysis) or complex natural language processing (for sophisticated phishing detection). In common discourse, deep learning in cybersecurity is often folded into the broader umbrella of machine learning, but its growing prominence warrants separate recognition.
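To make the machine learning description above concrete, here is a minimal sketch of a k-Nearest Neighbors classifier that separates benign from malicious traffic. The two features (average packet size and connections per minute), the sample values, and the function names are all assumptions invented for illustration; a production system would use far more data and a tested ML library.

```python
import math
from collections import Counter

# Hypothetical, hand-made training samples:
# (avg_packet_size_bytes, connections_per_minute) -> label
TRAINING_FLOWS = [
    ((520.0, 4.0), "benign"),
    ((610.0, 6.0), "benign"),
    ((480.0, 3.0), "benign"),
    ((700.0, 5.0), "benign"),
    ((90.0, 120.0), "malicious"),
    ((110.0, 95.0), "malicious"),
    ((75.0, 140.0), "malicious"),
]

def scale(features, mins, maxs):
    """Min-max scale each feature to [0, 1] so no single feature dominates the distance."""
    return tuple(
        (f - lo) / (hi - lo) if hi > lo else 0.0
        for f, lo, hi in zip(features, mins, maxs)
    )

def knn_classify(sample, training, k=3):
    """Label a sample by majority vote among its k nearest training points."""
    columns = list(zip(*(features for features, _ in training)))
    mins = [min(c) for c in columns]
    maxs = [max(c) for c in columns]
    scaled_sample = scale(sample, mins, maxs)
    distances = sorted(
        (math.dist(scaled_sample, scale(features, mins, maxs)), label)
        for features, label in training
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]

# Small packets and many connections resemble the malicious samples.
print(knn_classify((100.0, 110.0), TRAINING_FLOWS))  # malicious
print(knn_classify((550.0, 5.0), TRAINING_FLOWS))    # benign
```

The model never receives a rule such as "many small packets are suspicious"; it infers the boundary from labeled examples, which is the distinction the ML definition draws.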

While the goal of fully autonomous AI remains distant, the incremental improvements driven by ML and DL are already profound: they automate defensive tasks at a speed and scale that were previously far beyond human capability.

II. The Cybersecurity Challenges ML and AI Are Designed to Solve

To appreciate the utility of AI technologies, one must understand the current, systemic limitations plaguing human-centric cybersecurity operations. AI/ML solutions are engineered to alleviate these five critical pain points:

1. The Ubiquitous Human Factor in Configuration

The uncomfortable truth is that human error remains the leading cause of security breaches, with an estimated 95% of breaches traced back to it. Even a large, well-trained team of IT specialists struggles to properly configure increasingly complex network architectures. Computer security is constantly evolving, with new frameworks, patches, and integrations appearing daily. Configuring systems is a monumental task, especially when new infrastructure such as cloud computing must be built on top of legacy on-premises structures.

Manually assessing the reliability and security of these configurations is incredibly labor-intensive. IT staff must juggle endless updates and compatibility checks with their daily operational tasks. Adaptive, intelligent automation tools alleviate this by continuously monitoring configurations against a known secure baseline, flagging deviations, and, in advanced systems, automatically implementing necessary parameter changes. This continuous, automated compliance vastly reduces the attack surface created by misconfiguration.
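The baseline comparison described above can be sketched in a few lines. This is only the rule-based comparison and remediation step, not a learning system, and the setting names and values are hypothetical placeholders rather than options of any real product.

```python
# Hypothetical secure baseline; keys and values are illustrative only.
SECURE_BASELINE = {
    "ssh_password_auth": "disabled",
    "tls_min_version": "1.2",
    "admin_mfa": "enabled",
    "public_bucket_access": "blocked",
}

def find_drift(baseline, current):
    """Return {setting: (expected, actual)} for every setting that deviates from the baseline."""
    drift = {}
    for setting, expected in baseline.items():
        actual = current.get(setting, "<missing>")
        if actual != expected:
            drift[setting] = (expected, actual)
    return drift

def remediate(current, drift):
    """Return a corrected copy of the configuration with baseline values restored."""
    fixed = dict(current)
    for setting, (expected, _actual) in drift.items():
        fixed[setting] = expected
    return fixed

# An observed configuration with one wrong value and one missing setting.
observed = {
    "ssh_password_auth": "enabled",
    "tls_min_version": "1.2",
    "public_bucket_access": "blocked",
}

drift = find_drift(SECURE_BASELINE, observed)
for setting, (expected, actual) in sorted(drift.items()):
    print(f"DRIFT {setting}: expected {expected!r}, found {actual!r}")

# After remediation the configuration matches the baseline again.
print(find_drift(SECURE_BASELINE, remediate(observed, drift)))  # {}
```

Running a check like this on a schedule is what turns a one-off audit into continuous compliance; the adaptive tools mentioned above would additionally learn which deviations matter most.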

2. Inefficiency in Repetitive and Scaled Tasks

Manual labor efficiency is a core challenge in a domain demanding absolute precision and endless repetition. Human operators cannot replicate manual processes exactly the same way every time, particularly in a dynamic environment where systems, users, and threats change constantly. Tasks like customizing corporate endpoints are particularly labor-intensive. After initial provisioning, IT specialists frequently have to revisit devices to correct configurations, update settings, or manually triage issues that cannot be handled remotely.

Furthermore, when human employees are tasked with responding to threats, the scale of that threat can change instantly. The minute a security analyst is slowed down by an unforeseen issue or complexity, a system based on AI and machine learning can act with the speed of computation, minimizing delay. ML is uniquely suited to handle massive, repetitive data analysis and policy enforcement at a scale humans cannot match.

3. Alert Fatigue and Loss of Focus

The “bigger is better” approach to security often leads to alert fatigue. The more complex and multi-layered a security system becomes, the larger the attack surface and the higher the volume of automated security alerts. Security Information and Event Management (SIEM) systems can generate thousands of alerts per day. IT professionals must analyze these individually, often chasing false positives or low-priority signals before they find the alerts that actually require action.

The sheer volume of signals makes decision-making a daily, draining problem for cybersecurity teams. This workload often forces teams to focus solely on the most pressing issues, pushing secondary, but still critical, tasks to the background. AI-powered systems address this by applying advanced clustering and classification techniques. They can group similar threats, prioritize them based on real-time organizational risk, and even automatically label signals, significantly reducing the cognitive load on human analysts and ensuring they focus their skills on high-level strategic threats.
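As a rough illustration of grouping and prioritization, the sketch below collapses duplicate alerts and ranks the resulting groups by a simple risk score. Grouping by exact rule and host is a deliberately simple stand-in for the learned clustering the text describes, and the alert fields, severity scale, and criticality weights are assumptions made up for this example.

```python
from collections import defaultdict

# Hypothetical alerts: field names and values are illustrative only.
ALERTS = [
    {"rule": "failed_login", "host": "web-01", "severity": 3},
    {"rule": "failed_login", "host": "web-01", "severity": 3},
    {"rule": "failed_login", "host": "web-01", "severity": 3},
    {"rule": "malware_beacon", "host": "db-01", "severity": 8},
    {"rule": "port_scan", "host": "test-07", "severity": 4},
]

# Assumed business criticality per asset (1 = low, 5 = most critical).
ASSET_CRITICALITY = {"web-01": 3, "db-01": 5, "test-07": 1}

def group_alerts(alerts):
    """Collapse alerts that share the same rule and host into one group."""
    groups = defaultdict(list)
    for alert in alerts:
        groups[(alert["rule"], alert["host"])].append(alert)
    return groups

def prioritize(alerts, criticality):
    """Rank groups by (max severity x asset criticality); return (score, key, count) tuples."""
    ranked = []
    for key, members in group_alerts(alerts).items():
        _rule, host = key
        score = max(a["severity"] for a in members) * criticality.get(host, 1)
        ranked.append((score, key, len(members)))
    return sorted(ranked, reverse=True)

for score, (rule, host), count in prioritize(ALERTS, ASSET_CRITICALITY):
    print(f"score={score:>3}  {rule} on {host}  ({count} alert(s))")
```

Five raw alerts become three work items, with the single beacon on the critical database server ranked above the noisier login failures.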

4. Slow Threat Response Time

Threat response time is arguably the most important metric in cybersecurity effectiveness. Attacks increasingly move from initial exploitation to full deployment at machine speed. Before automation, attackers might have spent weeks manually scanning for vulnerabilities. Unfortunately, technological innovation is not exclusive to defense. Automated cyberattacks are now common, with advanced ransomware campaigns capable of compromising a network in as little as half an hour.

Human response times, even to known attack types, are fundamentally slow compared to computational speed. This disparity leads many security teams to focus more on remediating the consequences of successful attacks than on preventing them. ML technologies can extract attack data, group it almost instantly, and prepare it for analysis. They generate reports with actionable, recommended steps to limit further damage and prevent lateral movement, which in the best case can compress an attacker’s “dwell time” from months to minutes.

5. Identifying and Predicting Novel Threats

The final factor affecting response time is the ability to identify and anticipate novel threats. New attack types, previously unseen behaviors, and entirely new tools often confuse human specialists, causing significant response delays. Worse, less visible threats like subtle, persistent data exfiltration can go undetected for extended periods. According to industry breach reports, the average time to identify a data breach is months, with additional months required for full containment and remediation.

The constant evolution of attacker technologies, including the emergence of zero-day attacks, demands defenses built on adaptive intelligence. Fortunately, cyberattack methods are rarely invented entirely from scratch; they are often based on the tactics, platforms, and source code of past attacks. Machine learning thrives on this consistency. ML software can compare a new threat’s features to the accumulated knowledge of the existing threat base, quickly identifying commonalities, predicting new threat vectors, and drastically reducing the time required to develop an effective countermeasure.
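One simple way to picture the comparison of a new threat against the existing threat base is set similarity over observed behaviors. The sketch below uses Jaccard similarity; the family names, behavior labels, and the 0.5 threshold are invented for illustration, and real systems would use much richer features and learned models.

```python
# Hypothetical behavioral feature sets for known threat families (all labels invented).
KNOWN_THREATS = {
    "family_a": {"encrypts_files", "deletes_shadow_copies", "contacts_c2", "drops_ransom_note"},
    "family_b": {"logs_keystrokes", "contacts_c2", "injects_into_browser"},
    "family_c": {"scans_network", "brute_forces_ssh", "mines_cryptocurrency"},
}

def jaccard(a, b):
    """Jaccard similarity |A & B| / |A | B|: 0.0 for disjoint sets, 1.0 for identical sets."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def closest_family(sample_features, known, threshold=0.5):
    """Return (family, similarity) for the best match, or (None, similarity) below the threshold."""
    family, score = max(
        ((name, jaccard(sample_features, features)) for name, features in known.items()),
        key=lambda pair: pair[1],
    )
    return (family if score >= threshold else None, score)

# A previously unseen sample that reuses most of one family's tactics.
new_sample = {"encrypts_files", "deletes_shadow_copies", "contacts_c2", "disables_backups"}
family, similarity = closest_family(new_sample, KNOWN_THREATS)
print(family, round(similarity, 2))
```

Even though the sample contains a behavior no known family has, the overlap with past tactics is enough to suggest which existing countermeasures are a sensible starting point.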

III. Technical Applications: How ML Drives Cybersecurity Operations

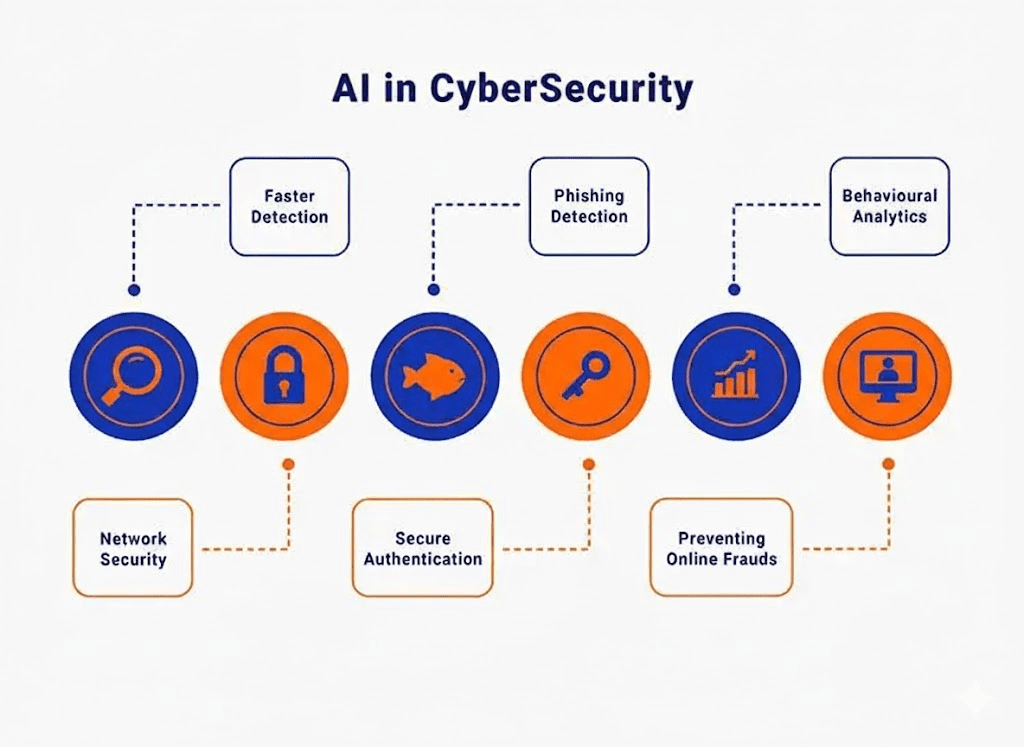

Machine learning-based security solutions represent the most powerful AI-based tools in cybersecurity today, focused intensely on accuracy and pattern recognition. While “true” AI seeks a natural, autonomous response, ML aims to find the optimal solution to a specific task based on available data. The core capabilities of ML in a security environment include:

A. Core Data-Processing Functions

- Data Classification: This assigns data points to specific categories based on predefined rules or learned patterns. For security, this is fundamental for creating attack and vulnerability profiles, enabling an immediate, automated response based on the data’s classification (e.g., flagging a file as “high-risk executable”).

- Data Clustering (Unsupervised Learning): This technique combines values filtered out during classification into clusters with common or, more importantly, *atypical* characteristics. Clustering is critical for analyzing novel attack data for which the system has no prior preparation. It helps determine the attack vectors, the vulnerabilities exploited, and the lateral movement patterns without requiring labeled examples.

- Predictive Forecasting: This is the most advanced ML-based process. By evaluating existing and real-time data sets, the model can estimate the probability of potential outcomes. Forecasting forms the basis of many predictive endpoint solutions used to build threat models, prevent financial fraud by monitoring transaction anomalies, and protect against data leaks by anticipating high-risk user actions.
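Of the three functions above, clustering is the easiest to show without labeled data. The following is a minimal k-means sketch that separates sessions into groups and singles out the smallest group for review. The two features (outbound megabytes and distinct destinations), the sample values, and the deterministic farthest-first seeding are assumptions chosen to keep the example small and reproducible.

```python
import math
from collections import Counter

def farthest_first(points, k):
    """Deterministic seeding: start at the first point, then repeatedly add the farthest point."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(
            max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        )
    return centroids

def kmeans(points, k, iterations=10):
    """Plain k-means: assign each point to its nearest centroid, then recompute the means."""
    centroids = farthest_first(points, k)
    assignments = [0] * len(points)
    for _ in range(iterations):
        assignments = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # keep the previous centroid if a cluster ends up empty
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return centroids, assignments

# Hypothetical unlabeled sessions: (outbound_mb, distinct_destinations)
SESSIONS = [
    (1.0, 2.0), (1.5, 1.8), (1.2, 2.2), (0.8, 1.9),
    (9.0, 9.5), (8.5, 9.0), (9.2, 10.0),
]

centroids, assignments = kmeans(SESSIONS, k=2)
sizes = Counter(assignments)
rare_cluster = min(sizes, key=sizes.get)  # the smaller group is the atypical one here
print("assignments:", assignments)
print("sessions to review:", [s for s, a in zip(SESSIONS, assignments) if a == rare_cluster])
```

No session was labeled as an attack in advance; the atypical group emerges from the data itself, which is why clustering suits previously unseen behavior.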

B. Advanced Use Cases

The integration of ML moves beyond mere detection into proactive defense management:

- Behavioral Biometrics and User Security Profiles (UEBA): In user and entity behavior analytics (UEBA), ML builds an individual profile of each employee’s typical behavior, covering everything from login times and locations to mouse movements and subtle keystroke dynamics (the speed and rhythm of typing). Any statistically significant deviation from this learned normal profile, no matter how subtle, is flagged. This model can detect unauthorized users or compromised accounts by analyzing behavioral anomalies long before any malicious file is executed, significantly shortening the window in which an intruder can operate undetected.

- Vulnerability Management and Patch Prioritization: Not all vulnerabilities are equally dangerous. Human teams struggle to prioritize patching thousands of known vulnerabilities. ML models combine real-time factors such as asset criticality, current exploit availability, network exposure, and attacker motivations to assign a contextual risk score that goes beyond static CVSS severity ratings. This enables security teams to focus scarce resources on the 1% of vulnerabilities that pose an immediate, critical threat.

- Next-Generation Phishing and Malware Detection: Traditional security relies on signature matching. Modern malware is polymorphic, constantly changing its code structure. Deep learning models, such as the recurrent neural networks (RNNs) used in natural language processing (NLP), can analyze the *intent* and *structure* of both malicious code and sophisticated phishing emails. Instead of just looking for a known signature, the system estimates the statistical likelihood that a file’s execution path or an email’s language patterns are malicious.

- Security Orchestration, Automation, and Response (SOAR): ML is the brains behind SOAR platforms. When a high-priority alert is generated, ML recommends the most rational course of action, derived from analyzing thousands of past threat responses. SOAR tools can then automatically execute multi-step playbooks—such as isolating an infected endpoint, blocking a malicious IP address at the firewall, or resetting a user’s credentials—all based on the ML-driven recommended action, dramatically improving threat response time.
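The behavioral profiling use case in this list can be illustrated with a per-user statistical baseline. The sketch below learns the mean and standard deviation of two features from past sessions and flags a new session whose z-score exceeds a threshold. The feature names, history values, and the threshold of 3 standard deviations are assumptions for illustration; commercial behavior analytics products use considerably more sophisticated models.

```python
import statistics

def build_profile(history):
    """Learn a per-feature baseline (mean, sample standard deviation) from past sessions."""
    return {
        feature: (
            statistics.mean(s[feature] for s in history),
            statistics.stdev(s[feature] for s in history),
        )
        for feature in history[0]
    }

def anomalies(profile, session, threshold=3.0):
    """Return {feature: z_score} for features more than `threshold` deviations from the mean."""
    flagged = {}
    for feature, (mean, stdev) in profile.items():
        if stdev == 0:
            continue  # no variation observed, so a z-score is undefined
        z = (session[feature] - mean) / stdev
        if abs(z) > threshold:
            flagged[feature] = round(z, 2)
    return flagged

# Hypothetical history for one user: login hour (0-23) and mean gap between keystrokes.
HISTORY = [
    {"login_hour": 9, "keystroke_interval_ms": 180},
    {"login_hour": 8, "keystroke_interval_ms": 175},
    {"login_hour": 9, "keystroke_interval_ms": 190},
    {"login_hour": 10, "keystroke_interval_ms": 185},
    {"login_hour": 9, "keystroke_interval_ms": 170},
]

profile = build_profile(HISTORY)
usual = anomalies(profile, {"login_hour": 9, "keystroke_interval_ms": 183})
odd = anomalies(profile, {"login_hour": 3, "keystroke_interval_ms": 95})
print("usual session:", usual)
print("odd session:", odd)
```

A 3 a.m. login with a much faster typing rhythm is flagged on both features even though the credentials themselves were valid, which is the point of profiling behavior rather than checking passwords alone.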

IV. Ethical, Regulatory, and Workforce Challenges for the Future

Despite its revolutionary potential, the mass adoption of AI and ML in cybersecurity faces significant hurdles that must be addressed to ensure responsible and effective deployment:

1. Data Privacy and Training Data Conflicts

Machine learning thrives on data. To build accurate, robust models that can distinguish between benign and malicious behavior, algorithms require large, diverse datasets. This creates a direct conflict with stringent data privacy legislation, such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA). The use of personal or personally identifiable information (PII) in training data can conflict with the right to erasure (the “right to be forgotten”) or with provisions governing automated decision-making.

Potential solutions being explored include:

- Federated Learning: Training the model on decentralized, local datasets (e.g., on individual devices or corporate networks) and only aggregating the resulting model weights, rather than the raw data itself.

- Differential Privacy: Introducing controlled, random “noise” into the training process (for example, into the data, the gradients, or the released statistics) so that the presence or absence of any single data point (and thus any individual’s PII) cannot be reliably inferred from the final model, while still maintaining overall statistical accuracy.

- Homomorphic Encryption: A highly complex cryptographic method that allows computation (training the ML model) to be performed on encrypted data without ever decrypting it.
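The aggregation step of federated learning, the first approach in this list, can be sketched as a weighted average of model weights. This is a minimal illustration of that one step under simplifying assumptions: each client is assumed to have already trained locally, and the two-element weight vectors and sample counts are invented values.

```python
def federated_average(client_updates):
    """Average client weight vectors, weighting each client by its number of training samples.

    client_updates: list of (weights, num_samples) tuples.
    Only model weights and counts are shared; raw training data never reaches the server.
    """
    total_samples = sum(n for _weights, n in client_updates)
    dims = len(client_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in client_updates) / total_samples
        for i in range(dims)
    ]

# Hypothetical locally trained weights from two sites with different data volumes.
CLIENT_UPDATES = [
    ([0.2, 1.0], 100),  # site A trained on 100 samples
    ([0.4, 0.0], 300),  # site B trained on 300 samples
]

global_weights = federated_average(CLIENT_UPDATES)
print(global_weights)  # approximately [0.35, 0.25]
```

Site B contributes three times as much to the global model because it trained on three times as much data, yet neither site reveals a single log line to the other or to the coordinating server.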

2. The AI Security Paradox: Adversarial Attacks

Artificial intelligence is not invincible; it can be fooled. Adversarial attacks exploit the very mathematical vulnerabilities of ML models. A sophisticated attacker can introduce tiny, often imperceptible, changes to input data (e.g., slightly altering a malware file) that are designed to trick the model into misclassifying the input. For example, a file recognized as malicious might be slightly altered just enough to be incorrectly flagged as benign by the ML-driven antivirus, allowing the attack to pass straight through. Since attackers are also using AI to craft sophisticated phishing emails and generate strings of malicious code, the defense and offense are engaged in a critical AI-versus-AI arms race.
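The evasion scenario above can be reproduced on a toy model. The sketch below uses a hand-written linear detector and shifts each input feature by a small, bounded amount in the direction that lowers the detection score, in the spirit of the fast gradient sign method. The weights, bias, feature values, and perturbation size are all invented, and real malware features usually cannot be changed this freely without breaking the file.

```python
# Toy linear detector: score = w . x + b, and a score above 0 means "malicious".
# Weights, bias, and feature values are invented for illustration.
WEIGHTS = [1.5, -2.0, 0.8]
BIAS = -0.2

def score(x):
    """Raw detection score for a feature vector."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS

def classify(x):
    return "malicious" if score(x) > 0 else "benign"

def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)

def evade(x, epsilon):
    """Shift every feature by at most epsilon in the direction that lowers the score.

    For a linear model the gradient of the score with respect to x is simply WEIGHTS,
    so stepping against sign(WEIGHTS) is the most damaging bounded perturbation.
    """
    return [xi - epsilon * sign(w) for xi, w in zip(x, WEIGHTS)]

sample = [0.6, 0.4, 0.5]
perturbed = evade(sample, epsilon=0.1)

print(classify(sample), round(score(sample), 2))        # malicious 0.3
print(classify(perturbed), round(score(perturbed), 2))  # benign -0.13
```

No feature moved by more than 0.1, yet the verdict flipped. Defenses such as adversarial training aim to make the decision boundary less sensitive to exactly this kind of small, targeted change.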

3. The Human-Machine Partnership and Talent Gap

AI will not replace cybersecurity jobs, but it will fundamentally redefine them. The current problem is a severe talent shortage. The industry needs more experts—often called “AI Security Engineers” or “MLOps Engineers”—who possess both deep knowledge of cybersecurity *and* advanced skills in maintaining, configuring, and interpreting ML systems. The effectiveness of any ML-based solution is critically dependent on personnel capable of ensuring the data used for training is unbiased, the model is properly tuned, and its interpretations are sound.

Teams of human specialists will remain an integral part of cybersecurity departments. Critical thinking, creativity, ethical judgment, and complex political decision-making—qualities neither machine learning nor current AI technologies yet possess—will remain vital. Therefore, AI and ML must be treated as force multipliers: a set of tools in the hands of the human cybersecurity team, allowing them to elevate their focus from routine triage to strategic defense and novel threat hunting.

V. Three Critical Cybersecurity Tips Powered by AI and Machine Learning

To navigate this evolving landscape, organizations must adopt a forward-looking strategy that integrates these technologies thoughtfully:

- Invest in Keeping Your Technology Future-Proof: As threats become more sophisticated and automated, the potential damage from exploiting vulnerabilities caused by outdated technologies or manual processes increases exponentially. To mitigate risks, you must continuously upgrade. Leverage cutting-edge technologies, such as integrated endpoint protection solutions that employ advanced ML for behavioral analysis, to better prepare your organization for the rapid changes in the threat landscape.

- Augment Your Teams with AI and ML; Do Not Replace Them: Vulnerabilities will still exist, and no system on the market today is completely foolproof. Since even adaptive AI-powered systems can be fooled by sophisticated, adversarial attack methods, ensure your IT team is adequately trained to work with and support this new infrastructure. They must be experts in interpreting the ML output and overriding automated decisions when context or ethical considerations demand it.

- Regularly Update Your Data Processing Policy to Comply with Changing Legislation: Data privacy has become a global focus for policymakers. Because your security systems are now using sensitive data to train their models, you must update your data processing and retention policies to ensure you adhere to the most current regulations, specifically concerning the use of data in automated decision-making and data anonymization requirements.

The future of cybersecurity is a synergistic partnership between human ingenuity and artificial intelligence. By leveraging the speed and scalability of ML, organizations can address the critical challenges of complexity, speed, and volume, securing the digital perimeter for the next generation.

Frequently Asked Questions About AI and Machine Learning in Cybersecurity

How exactly can AI be used in cybersecurity?

AI in cybersecurity is primarily used for ultra-fast, large-scale data processing and autonomous pattern recognition. This enables systems to monitor, detect, and respond to a wide range of cyberthreats in near real time. Response processes can be partially or fully automated using SOAR tools based on information generated by the AI system (e.g., isolating an infected system), meaning threats can often be contained before human analysts are even aware of the incident.

What are the measurable benefits of AI in cybersecurity applications?

The benefits are quantifiable and centered on efficiency and scale. Artificial intelligence is capable of detecting and remediating threats orders of magnitude faster and across a greater volume of data than the most skilled security professionals. This speed is achieved through AI’s ability to rapidly analyze massive data sets, detect minute anomalous patterns and trends, and automate repetitive processes that are otherwise time-consuming and prone to human error. This efficiency not only improves the overall security posture but also frees up valuable time for security teams, allowing them to focus their human skills on proactive threat hunting, strategic planning, and novel problem-solving.

What are the most significant risks of relying on AI in cybersecurity?

The risks are multi-faceted. First, AI systems are built on historical data, making them inherently less effective at detecting genuinely novel threats until they have been exposed to examples of them. Second, AI is not yet robust enough to operate completely independently; human oversight remains essential for ethical and sound security decisions. Third, there is the critical risk of **adversarial machine learning**. As noted previously, attackers can use their own AI or subtle input modifications to exploit the mathematical weaknesses in the defensive AI model, causing it to misclassify a malicious file as benign. It’s also important to remember that AI is a dual-use technology, and cybercriminals are increasingly using it to craft sophisticated phishing emails and generate malicious code, which makes a strong defense ever more crucial.

Will AI completely replace human cybersecurity jobs?

No. AI will not completely replace cybersecurity work, but it will certainly lead to a fundamental rethinking of what human security professionals should do. Many repetitive, high-volume, and routine tasks—such as triage, logging, and initial response—will be handled by AI and automation technologies. This shift requires humans to move into higher-value roles, focusing on governance, managing and overseeing the AI tools, ensuring they operate correctly, checking for bias in automated decisions, and providing the creative, critical thinking necessary to counter novel threats.